OpenAI has launched Sora, a powerful text-to-video model poised to reshape content creation. By generating coherent videos from simple text prompts, Sora aims to challenge industry norms. It combines visual storytelling with deep learning to produce remarkably precise and detailed results. Sora positions itself ahead of major AI competitors as creators seek faster, smarter tools.

Its simple interface and high-quality output make it ideal for teachers, marketers, and filmmakers. The technology also signals broader shifts in how video is created and conceptualized. While competitors rush to catch up, Sora highlights OpenAI's commitment to innovation. Advanced text-to-video AI increasingly drives modern content creation, exemplified by OpenAI's Sora.

Sora is built on OpenAI's transformer-based architecture and is designed specifically to generate high-quality video from text. It interprets detailed prompts and converts them into dynamic imagery. The system captures intricate details such as scene transitions, depth, motion, and camera angles, and it aims to keep every frame aligned with the emotional tone and narrative of the input. Compared to previous models, Sora reduces flicker and distortion by maintaining greater consistency across frames and over time.

The model adapts to various artistic styles, from animation to photorealism. Its ability to maintain object fidelity across scenes makes it reliable for professional use. Large-scale training data and self-supervised learning form the foundation of the text-to-video engine. It recognizes human actions, environmental settings, and even physical interactions. Fast inference lets users create short films or product videos in minutes. Potential applications span entertainment, education, advertising, and scientific visualization.

Sora enters a competitive field that includes Runway, Pika Labs, and Google's Imagen Video. Although each offers distinctive features, Sora leads in visual coherence and in rendering speed and fidelity. Runway excels at style transfer and real-time editing but struggles with long-form video generation. Pika Labs produces seamless transitions but is limited in realism and character continuity. Google's Imagen Video renders natural elements well but lacks Sora's precise control over scene dynamics.

Sora distinguishes itself with its end-to-end combination of spatial design, motion, and auditory cues. It generates narrative flow and has a better grasp of storytelling elements. Fewer technical steps mean more control for the user. While rivals rely on post-processing, Sora produces polished work in a single pass. The approach's adaptability also gives it an advantage across sectors: it maintains quality from social media snippets to short films. By offering speed, dependability, and originality in one package, Sora puts pressure on its competitors.

From entertainment and marketing to e-commerce and education, Sora creates fresh opportunities. In filmmaking, it speeds up storyboarding and idea visualization. Within hours, not weeks, directors can translate scripts into visuals. Marketers use Sora to produce engaging commercials built around seasonal themes or product attributes, and it supports brand storytelling with a consistent style. Teachers can create brief classroom videos that fit their curricula. Students respond better to custom visuals than to generic animations.

E-commerce companies use Sora to produce affordable product demos and explainer videos. The healthcare sector uses Sora for training programs and surgical tool visualization. By reducing production costs and complexity, Sora empowers independent creators. Instead of building costly prototypes, startups can present ideas through simulated scenarios. The platform's straightforward interface means that users do not need strong technical knowledge. Sora helps preserve context and originality in many fields while accelerating content production. It closes the distance between vision and reality.

Sora's capabilities raise legitimate concerns about deepfakes, misinformation, and artistic exploitation. To mitigate these risks, OpenAI has developed content filters, digital watermarking, and human review. Digital signatures embedded in generated videos help verify authenticity and enable traceability. These safeguards help platforms identify potentially harmful outputs. The company also prohibits explicit content and sensitive use cases, including political influence. Curated training data is intended to promote balanced representation and reduce bias.

In collaboration with external experts, OpenAI audits model behavior under specific conditions. Access policies limit who can use Sora and specify acceptable use cases. For example, age-restricted content and deceptive prompts are prohibited. Public deployment includes transparency tools and opt-in systems. Developers receive guidance on ethical practices and responsible innovation. These steps reflect OpenAI's ongoing commitment to building safe and responsible AI. Though problems remain, the framework sets a standard for ethical use in the generative video landscape.

Sora is likely to shape media production and platform interaction in the future. Demand for quick, creative tools increases as video takes center stage in communication. Sora raises content quality while shortening production cycles. Companies turn to it to strengthen brand narratives and speed up marketing. With Sora's help, education systems can develop vibrant learning modules. Social media creators find fresh ways to experiment with formats and viral visuals.

Integration with other OpenAI models could improve editing, narrative, and scriptwriting. Sora might develop into a full-stack content suite powered by multimodal AI. Analysts and investors view Sora as a driver of the rapidly growing AI video market. Rivals might adopt similar models or seek alliances to stay current. Sora's release signals a turning point, not just the arrival of a new tool. It shows how advanced text-to-video AI will transform digital storytelling, corporate outreach, and creative innovation.

Sora embodies OpenAI's goal of leading the AI-driven video revolution. It redefines what artificial intelligence can achieve with strong visuals, rapid processing, and ethical safeguards. The technology helps businesses, artists, and teachers create engaging material quickly and consistently. Its success will likely drive broader adoption and compel competitors to innovate. Sora represents a significant advance in response to the growing demand for better creative tools. As an AI video generator and an advanced text-to-video model, OpenAI's Sora anchors the shift toward next-generation visual storytelling.

Advertisement

Discover how to generate enchanting Ghibli-style images using ChatGPT and AI tools, regardless of your artistic abilities

Need to convert a Python list to a NumPy array? This guide breaks down six reliable methods, including np.array(), np.fromiter(), and reshape for structured data

Meta's scalable infrastructure, custom AI chips, and global networks drive innovation in AI and immersive metaverse experiences

How DeepSeek LLM: China’s Latest Language Model brings strong bilingual fluency and code generation, with an open-source release designed for practical use and long-context tasks

RAG combines search and language generation in a single framework. Learn how it works, why it matters, and where it’s being used in real-world applications

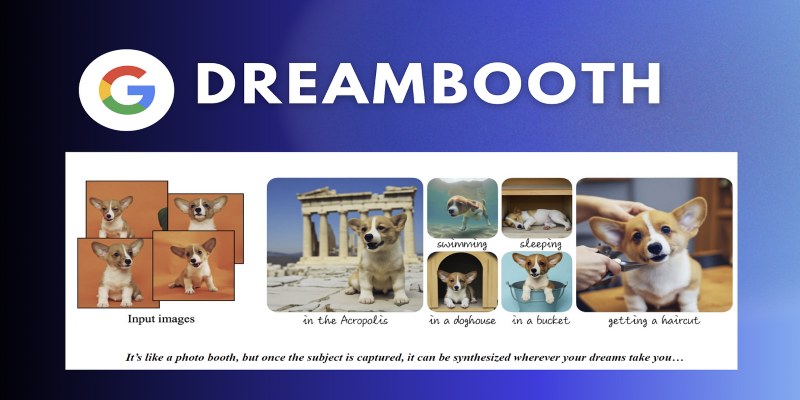

Learn how DreamBooth fine-tunes Stable Diffusion to create AI images featuring your own subjects—pets, people, or products. Step-by-step guide included

Discover how OpenAI's Sora sets a new benchmark for AI video tools, redefining content creation and challenging top competitors

AWS launches AI chatbot, custom chips, and Nvidia partnership to deliver cost-efficient, high-speed, generative AI services

Understand how the Python range() function works, how to use its start, stop, and step values, and why the range object is efficient in loops and iterations

Explore key features, top benefits, and real-world use cases of OpenAI reasoning models that are transforming AI in 2025.

How Direct Preference Optimization improves AI training by using human feedback directly, removing the need for complex reward models and making machine learning more responsive to real-world preferences

Find how Flux Labs Virtual Try-On uses AI to change online shopping with realistic, personalized try-before-you-buy experiences