Advertisement

The way we process language with machines has often followed two tracks: retrieval and generation. One pulls from a fixed base of documents; the other creates new responses from training data. Until recently, these methods worked in parallel but not in harmony. Retrieval was your go-to for fact-based outputs. Generation handled language fluency and creativity. Then came Retrieval-Augmented Generation — RAG — a system that merges both into one integrated framework.

This isn't just a minor tweak to existing models. It rethinks how information is pulled, processed, and then expressed. For developers and researchers working with natural language processing (NLP), this shift brings accuracy, context awareness, and flexibility in ways that weren't practical before.

What separates RAG from earlier models is the way it blends retrieval and generation into a single loop. Rather than working from a memorized base of knowledge, it actively pulls in relevant information from external sources while generating the response. This isn't about adding a search step before writing. It's about building a system where generation depends on what's retrieved, and retrieval is guided by what the model is trying to write.

Say someone asks, “What are the health benefits of turmeric?” A regular generative model tries to recall what it saw during training and predict an answer. RAG works differently. It first searches for relevant documents — articles, passages, summaries — that speak directly to the question. It then writes an answer while referencing this material in real-time. That structure allows the response to stay grounded in facts, not guesses.

What’s more, RAG doesn’t require retraining every time new information needs to be included. You just update the retrieval index — the model continues pulling from the most current data. It’s adaptable without being dependent on fine-tuning.

To understand the mechanics behind RAG, it helps to break down its process into clear steps:

The model starts by converting the input into a dense vector using a query encoder. This vector represents the meaning of the input in a mathematical form. It’s what allows the system to find documents that are contextually related, not just keyword matches.

Using the encoded input, the system searches a document database or knowledge index. It returns a set of passages that closely match the meaning of the original query. These passages can come from internal documents, external databases, or even a live feed.

Each retrieved passage is then turned into its own encoded format. This makes it compatible with the generation model. Instead of treating the information as background noise, RAG directly integrates these chunks into its response process.

Finally, the model writes a response. But instead of guessing from memory, it uses the retrieved passages as live references. The generator attends to both the original query and the retrieved material. Every word is shaped by this combination of sources, which helps the final answer stay aligned with real-world facts.

RAG isn't just useful because it improves responses — it changes the way systems are built and maintained.

Traditional language models need retraining to stay current. RAG doesn’t. When the underlying knowledge changes, you don’t touch the model itself. You just update what it pulls from. That means lower overhead, faster adjustments, and a better fit for fast-moving fields like healthcare, law, and research.

One advantage of RAG’s setup is its transparency. Because the model pulls from actual documents, it’s easy to see where an answer came from. If a user questions the response, developers or users can track it back to the original source. This helps build confidence in the system and makes it easier to fix errors or improve content coverage.

In domains where precision matters — medical guidance, technical documentation, financial analysis — you can’t afford vague responses. Pure generation may offer fluent language, but it often misses key facts. Retrieval adds context, and when paired with generation in RAG, it produces language that’s both accurate and clearly expressed.

The combined strength of looking up relevant data and generating smooth responses offers a middle ground. It avoids the blandness of pre-written templates and the inaccuracy of language models guessing from memory.

In actual deployments, RAG fits well where content needs to be generated on demand but anchored in current or specialized knowledge.

Imagine a support chatbot connected to a product’s documentation. With every question a user asks, the system retrieves the most recent articles and answers based on them. There's no need to hard-code updates into the model — just keep the help documents fresh, and the model stays useful.

Students can input questions that require more than basic definitions — questions with nuance, context, or multiple viewpoints. RAG finds matching study material and uses it to form detailed yet clear answers. The experience becomes more conversational and less robotic.

Legal teams can ask for summaries or clarifications on rules, statutes, or filings. RAG fetches the most relevant sections and forms a well-structured explanation based on official documents. The risk of errors from memorized data drops significantly.

In clinical settings, RAG can assist professionals by pulling from current research papers, medical guidelines, and health databases to provide informed responses. This blend of real-time lookup and fluent generation is especially helpful when accuracy is non-negotiable.

RAG reshapes the relationship between retrieval and generation. Instead of making them separate steps, it builds a loop where each informs the other. Responses aren't based on outdated memory or static knowledge — they're grounded in data that's pulled fresh each time.

By connecting the strengths of retrieval and the fluency of generation, RAG offers a smarter way to build language systems. It keeps things current, transparent, and flexible — all while writing responses that sound natural and stay on-topic.

It’s not just about getting better answers. It’s about building models that know when to look something up — and how to use it once they do.

Advertisement

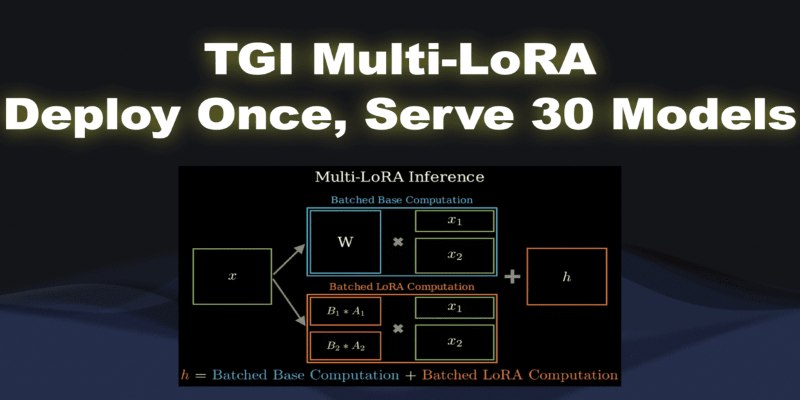

What if you could deploy dozens of LoRA models with just one endpoint? See how TGI Multi-LoRA lets you load up to 30 LoRA adapters with a single base model

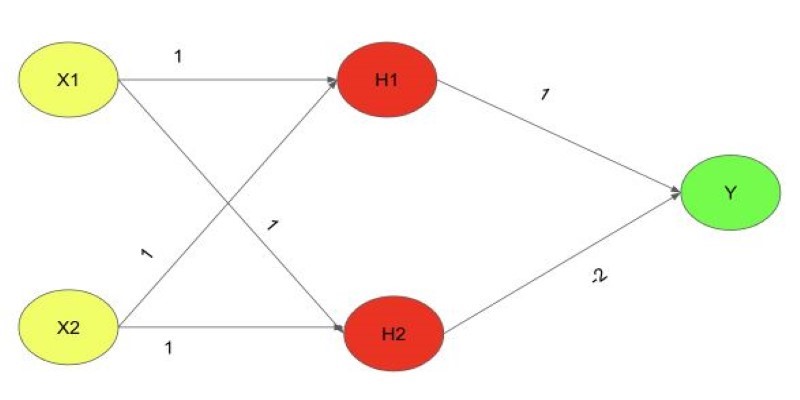

Explore the XOR Problem with Neural Networks in this clear beginner’s guide. Learn why simple models fail and how a multi-layer perceptron solves it effectively

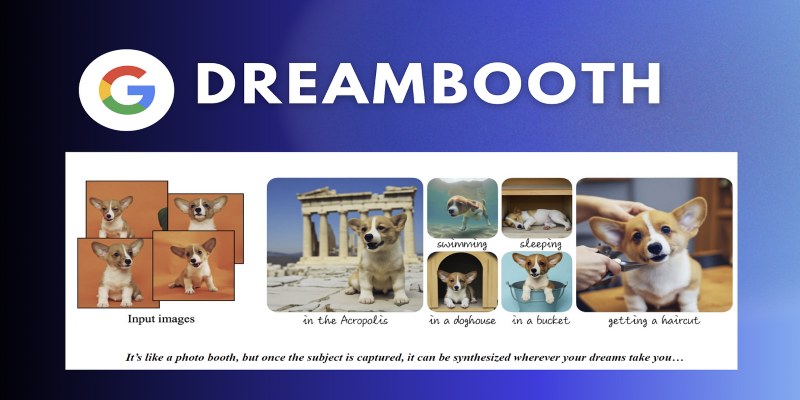

Learn how DreamBooth fine-tunes Stable Diffusion to create AI images featuring your own subjects—pets, people, or products. Step-by-step guide included

How DeepSeek LLM: China’s Latest Language Model brings strong bilingual fluency and code generation, with an open-source release designed for practical use and long-context tasks

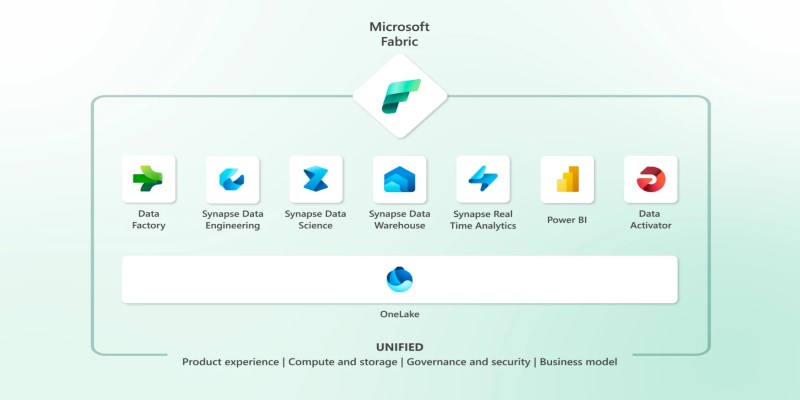

Explore Microsoft Fabric, a unified platform that connects Power BI, Synapse, Data Factory, and more into one seamless data analytics environment for teams and businesses

Reddit's new data pricing impacts AI training, developers, and moderators, raising concerns over access, trust, and openness

Transform any website into an AI-powered knowledge base for instant answers, better UX, automation, and 24/7 smart support

How Python’s classmethod() works, when to use it, and how it compares with instance and static methods. This guide covers practical examples, inheritance behavior, and real-world use cases to help you write cleaner, more flexible code

Need to convert a Python list to a NumPy array? This guide breaks down six reliable methods, including np.array(), np.fromiter(), and reshape for structured data

How to create Instagram Reels using Predis AI in minutes. This step-by-step guide shows how to turn ideas into high-quality Reels with no editing skills needed

Gemma 3 mirrors DSLMs in offering higher value than LLMs by being faster, smaller, and more deployment-ready

How Direct Preference Optimization improves AI training by using human feedback directly, removing the need for complex reward models and making machine learning more responsive to real-world preferences