Generative AI has made incredible strides in just a few years, with Large Language Models (LLMs) like GPT grabbing all the attention. But now, a new wave of AI models called Deep Structured Language Models (DSLMs) is starting to shake things up, challenging the long-standing reign of traditional LLMs. These innovative models not only promise smarter outputs but also bring enhanced efficiency, transparency, and practical usability to the table. Whether it's for business applications or eco-friendly initiatives, DSLMs are showing their value across various fields.

In this article, we’ll dive into how DSLMs are outpacing LLMs in terms of technical prowess, economic benefits, and ethical considerations—and why they could be the key to the future of generative AI.

Deep Structured Language Models (DSLMs) are the next big thing in generative AI, designed to tackle the shortcomings of traditional Large Language Models (LLMs). While LLMs often struggle with their sheer size and data demands, DSLMs utilize structured data and hierarchical logic to enhance context comprehension, efficiency, and training speed. These models aim to mimic human reasoning patterns more closely, making them more flexible and scalable. Unlike LLMs, which can sometimes feel like black boxes, DSLMs offer a clearer architecture, a significant advantage for developers, researchers, and businesses seeking trustworthy, explainable AI.

DSLMs are demonstrating their value over LLMs due to their scalability, cost-effectiveness, and versatility. While LLMs demand hefty hardware and data resources, DSLMs can deliver impressive performance with optimized computing. Their structured approach reduces redundancy and enhances output accuracy in real-world scenarios. In business settings, DSLMs are easier to fine-tune for specific applications, making them a smarter long-term investment. Additionally, they require less retraining, resulting in lower operational costs. All these factors make DSLMs not just technologically advanced but also a more economical choice for businesses, especially those in need of customized AI solutions.

DSLMs excel in practical situations where structured reasoning, contextual relevance, and computational efficiency are crucial. In fields such as healthcare, legal research, or customer service, DSLMs produce more consistent and exact results than LLMs. They adhere to specific corporate guidelines, interpret structured prompts more reliably, and reduce hallucinations, a major problem for LLMs. Trained on very large, general-purpose datasets, LLMs can produce more generic, verbose, or erroneous answers. When the quality of results matters more than the sheer quantity of data and processing, DSLMs shine.

DSLMs introduce several ideas that set them apart from earlier LLMs, including semantic data processing layers, hybrid training approaches, and modular architectures. Unlike LLMs, which depend on extensive end-to-end deep learning, DSLMs combine statistical learning with symbolic reasoning and hierarchical knowledge systems. This combination lets the model break complex tasks into smaller, manageable units. DSLMs also rely on more intelligent architectural design rather than brute force, improving performance even on less powerful hardware.
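
To make the idea of mixing symbolic reasoning with statistical learning more concrete, here is a minimal toy sketch in Python. It is not the implementation of any real DSLM: the rule table, the function names (`symbolic_step`, `statistical_step`, `decompose`, `solve`), and the splitting heuristic are all hypothetical and chosen purely for illustration. It shows a task being broken into smaller units, with explicit rules tried first and a placeholder "learned" component used as a fallback.

```python
from typing import Optional

# Hypothetical symbolic layer: explicit keyword rules checked before
# any learned component is consulted.
RULES = {
    "refund": "Route to the billing policy.",
    "password": "Send the password reset procedure.",
}


def symbolic_step(subtask: str) -> Optional[str]:
    """Return a rule-based answer if a keyword rule matches, else None."""
    lowered = subtask.lower()
    for keyword, answer in RULES.items():
        if keyword in lowered:
            return answer
    return None


def statistical_step(subtask: str) -> str:
    """Stand-in for a learned model; a real system would call one here."""
    return f"[model output for: {subtask}]"


def decompose(task: str) -> list:
    """Naive decomposition (an assumption): split the task on ' and '."""
    return [part.strip() for part in task.split(" and ") if part.strip()]


def solve(task: str) -> list:
    """Handle each subtask with rules first, falling back to the model."""
    results = []
    for subtask in decompose(task):
        answer = symbolic_step(subtask)
        source = "symbolic"
        if answer is None:
            answer = statistical_step(subtask)
            source = "statistical"
        results.append((subtask, source, answer))
    return results


if __name__ == "__main__":
    for row in solve("process a refund and summarize the complaint"):
        print(row)
```

In this sketch, the refund subtask is answered by a rule and the summary subtask falls through to the placeholder model, which is the kind of division of labor the paragraph above describes.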

One of the main criticisms leveled at LLMs is their hefty environmental impact. Training these large models requires a significant amount of energy and a substantial computing setup. On the other hand, DSLMs are designed with sustainability at their core. Their compact and organized architecture allows for quicker training and lower energy consumption. This efficiency also means they rely less on extensive hardware, making them a viable option for smaller organizations. As the tech world increasingly prioritizes sustainability, DSLMs present a forward-thinking alternative to the energy-guzzling LLMs.

In today's landscape, transparency and the development of ethical AI are more critical than ever. DSLMs enhance auditability by using more interpretable layers and decision paths. Unlike the black-box nature of LLMs, which can be a mystery even to their developers, DSLMs offer traceable logic that aids in debugging, compliance, and ethical assessments. This makes them a better choice for industries with strict regulatory requirements. They also facilitate more transparent model governance, allowing teams to implement safeguards and rules more effectively.
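
As a rough illustration of what a traceable decision path could look like, the following Python sketch records every step that leads to a decision so it can be reviewed later. The `Trace` class, the `classify_request` function, and the keyword rule are hypothetical and invented for this example; a production audit trail would be considerably more involved.

```python
from dataclasses import dataclass, field


@dataclass
class Trace:
    """Hypothetical audit trail: an ordered list of recorded steps."""
    steps: list = field(default_factory=list)

    def record(self, stage: str, detail: str) -> None:
        self.steps.append({"stage": stage, "detail": detail})


def classify_request(text: str, trace: Trace) -> str:
    """Decide how to handle a request, logging each step to the trace."""
    trace.record("input", text)
    if "medical" in text.lower():
        # Illustrative safeguard: sensitive topics go to a person.
        trace.record("rule", "matched keyword 'medical'")
        decision = "escalate_to_human"
    else:
        trace.record("rule", "no restricted keyword matched")
        decision = "auto_respond"
    trace.record("decision", decision)
    return decision


if __name__ == "__main__":
    trace = Trace()
    classify_request("Question about a medical record", trace)
    for step in trace.steps:
        print(step["stage"], "->", step["detail"])
```

Because each rule that fires is written to the trace, a reviewer can see why a given request was escalated without inspecting the model itself.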

DSLMs mark a significant shift in our perspective on generative AI. By prioritizing structure, efficiency, and interpretability, they address the shortcomings of large, unwieldy LLMs. As the AI landscape evolves, the measure of value will shift from model size alone to factors such as reliability, speed, sustainability, and ease of integration. DSLMs are set to become the preferred choice in the industry for meeting these demands. While they're not without their flaws, they represent a more intelligent, streamlined approach to AI, one that delivers value where it matters most.

Advertisement

Discover how to generate enchanting Ghibli-style images using ChatGPT and AI tools, regardless of your artistic abilities

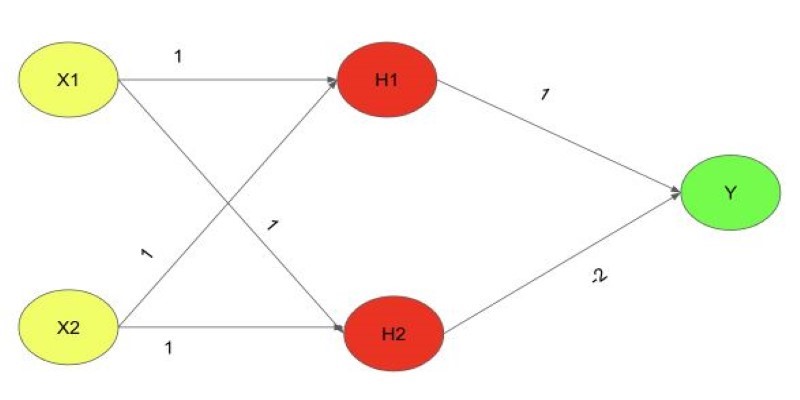

Explore the XOR Problem with Neural Networks in this clear beginner’s guide. Learn why simple models fail and how a multi-layer perceptron solves it effectively

Meta's scalable infrastructure, custom AI chips, and global networks drive innovation in AI and immersive metaverse experiences

How to create Instagram Reels using Predis AI in minutes. This step-by-step guide shows how to turn ideas into high-quality Reels with no editing skills needed

Transform any website into an AI-powered knowledge base for instant answers, better UX, automation, and 24/7 smart support

How Python handles names with precision using namespaces. This guide breaks down what namespaces in Python are, how they work, and how they relate to scope

How Python’s classmethod() works, when to use it, and how it compares with instance and static methods. This guide covers practical examples, inheritance behavior, and real-world use cases to help you write cleaner, more flexible code

How Direct Preference Optimization improves AI training by using human feedback directly, removing the need for complex reward models and making machine learning more responsive to real-world preferences

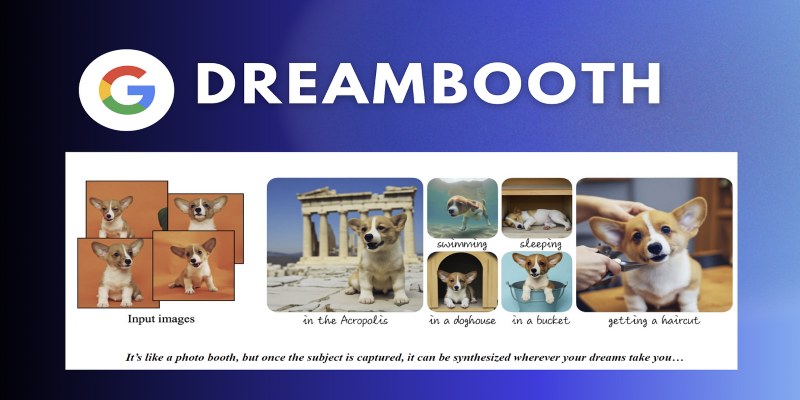

Learn how DreamBooth fine-tunes Stable Diffusion to create AI images featuring your own subjects—pets, people, or products. Step-by-step guide included

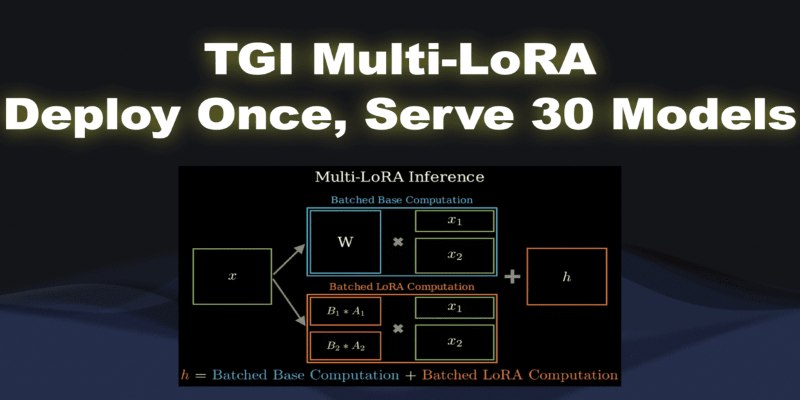

What if you could deploy dozens of LoRA models with just one endpoint? See how TGI Multi-LoRA lets you load up to 30 LoRA adapters with a single base model

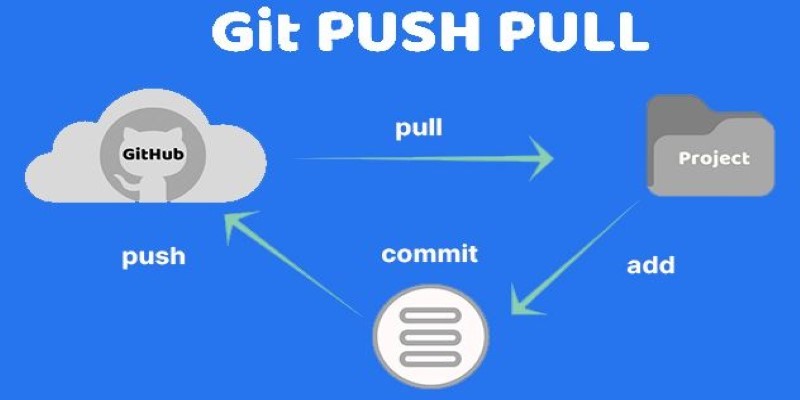

Still unsure about Git push and pull? Learn how these two commands help you sync code with others and avoid common mistakes in collaborative projects

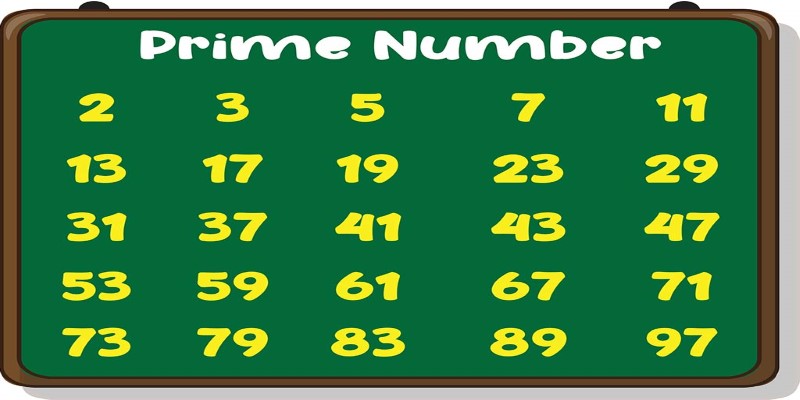

Learn how to write a prime number program in Python. This guide walks through basic checks, optimized logic, the Sieve of Eratosthenes, and practical code examples