Imagine walking into a digital world and having a natural conversation with a character who responds thoughtfully, not from a script, but in real time. That's what NPC-Playground offers—a 3D environment filled with AI-driven non-player characters that speak, react, and adapt like real people. These aren't the robotic NPCs seen in typical games. They're powered by large language models (LLMs), making each interaction feel spontaneous and grounded.

NPC-Playground provides developers, educators, and curious users with a means to explore how AI characters might behave in freeform scenarios. Whether you're asking questions, roleplaying, or observing how different NPCs react, this space opens a window into the future of AI in digital interaction.

At its core, NPC-Playground is a simple 3D scene built on the Cubzh game engine, where users can interact with virtual characters backed by a large language model. Instead of choosing from fixed responses, you type or speak freely, and the character answers based on its personality, background, and current context. Each NPC has a defined role, whether it's a street vendor, historian, or curious child, and the AI adapts its replies accordingly.

These characters are not truly aware, but they simulate presence well. They handle casual conversation naturally, keep track of follow-up questions, and occasionally surprise you with wit or insight. You can test boundaries, go off-script, or ask philosophical questions, and the model tries to keep up.

Unlike traditional NPCs locked into pre-written lines, these characters aren't limited by scripts. Instead, prompts sent to the language model guide behavior, tone, and knowledge. Combined with text-to-speech and animation tools, the experience feels far more organic than anything built on hand-written dialogue trees.

While the 3D world creates the illusion, most of the intelligence happens in the background. The system takes your input and sends it, along with context about the NPC’s role, to the language model. That context includes personality traits, setting details, and interaction goals. This shaping prompt is what makes a grumpy blacksmith sound different from a cheerful shopkeeper.
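To make the idea of a shaping prompt concrete, here is a minimal sketch of how player input and NPC context might be combined before being sent to a model. The `NPCProfile` class, its fields, and the `build_messages` helper are hypothetical names chosen for illustration, and the system/user message layout is simply a common chat convention, not NPC-Playground's actual format.

```python
from dataclasses import dataclass, field


@dataclass
class NPCProfile:
    """Hypothetical container for the context sent alongside player input."""
    name: str
    role: str
    personality: list[str]
    setting: str
    goals: list[str] = field(default_factory=list)


def build_messages(npc: NPCProfile, player_input: str) -> list[dict[str, str]]:
    """Combine the NPC's shaping prompt with the player's message."""
    system_prompt = (
        f"You are {npc.name}, a {npc.role}. "
        f"Personality: {', '.join(npc.personality)}. "
        f"Setting: {npc.setting}. "
        f"Goals: {'; '.join(npc.goals) or 'none'}. "
        "Stay in character and answer in one or two sentences."
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": player_input},
    ]
```

Swapping the profile is all it takes to change the voice: a blacksmith with `personality=["grumpy", "blunt"]` and a shopkeeper with `personality=["cheerful", "chatty"]` would receive the same player message wrapped in very different instructions.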

The model then generates a response, which can be converted into audio using text-to-speech tools. The character “speaks,” and optional animations—like hand gestures or facial changes—can be triggered based on sentiment or keywords.
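The keyword-based trigger mentioned above could look something like the sketch below. The animation names and keyword sets are invented for illustration, and a sentiment-based version would replace the word lookup with a classifier score.

```python
import re

# Hypothetical mapping from animation clip names to trigger words.
ANIMATION_KEYWORDS = {
    "wave": {"hello", "welcome", "goodbye"},
    "shrug": {"maybe", "perhaps", "unsure"},
    "frown": {"no", "never", "sorry"},
}
DEFAULT_ANIMATION = "idle_talk"


def pick_animation(reply: str) -> str:
    """Return the first animation whose keywords appear in the reply."""
    # Lowercase and split into words so "Hello," still matches "hello".
    words = set(re.findall(r"[a-z']+", reply.lower()))
    for animation, keywords in ANIMATION_KEYWORDS.items():
        if words & keywords:
            return animation
    return DEFAULT_ANIMATION
```

Matching on whole words rather than substrings avoids false positives such as "no" firing inside "know".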

It's not just about what they say but how they say it. Developers can experiment with personality tuning, adding memory within sessions, or adjusting emotional tone. The key is that all responses are generated live, meaning the dialogue feels less robotic and more responsive.
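Memory within a session can be as simple as replaying the last few exchanges with every request. The sketch below assumes a chat-style message list; `SessionMemory` and its methods are hypothetical names, not part of the playground itself.

```python
from collections import deque


class SessionMemory:
    """Keeps the most recent turns so the model sees prior context."""

    def __init__(self, max_turns: int = 6) -> None:
        # One turn is a player message plus the NPC's reply, hence * 2.
        # The deque silently drops the oldest messages once it is full.
        self._messages = deque(maxlen=max_turns * 2)

    def add_turn(self, player_text: str, npc_text: str) -> None:
        """Record one completed exchange."""
        self._messages.append({"role": "user", "content": player_text})
        self._messages.append({"role": "assistant", "content": npc_text})

    def as_messages(self, system_prompt: str, player_text: str) -> list[dict[str, str]]:
        """Build the full request: persona, remembered turns, new input."""
        return [
            {"role": "system", "content": system_prompt},
            *self._messages,
            {"role": "user", "content": player_text},
        ]
```

Capping the history keeps requests small and cheap, at the cost of the character forgetting anything older than `max_turns` exchanges.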

This setup creates a lightweight but flexible framework. The playground can be modified to add new scenes, NPCs, or objectives, giving creators plenty of freedom to test different interaction models. And since the heavy lifting is done by the LLM, updates can focus on character behavior rather than rewriting dialogue lines.

Traditional NPCs serve a function—they provide quests, background info, or world flavor. But they don't change much. NPC-Playground introduces the idea of characters that talk back like real people, offering deeper engagement without needing thousands of pre-written lines.

For game design, this changes how stories can be told. Writers don’t have to build branching dialogue trees. Instead, they design characters—define who they are, how they speak, what they know—and let the model generate conversations. It’s more fluid, and players are no longer boxed in by a list of dialogue options.

This has applications far beyond entertainment. In training simulations, LLM-powered NPCs can model complex interactions—like customer support or medical interviews—where unpredictability is key. In education, learners could talk with historical figures or practice language skills in a conversational setting that adjusts to their level.

There are real concerns, too. Unfiltered LLM responses can include mistakes or bias, and not every reply will make sense. Developers need systems to flag or correct unsafe output. The playground provides a safe space to test and refine these tools before larger deployment.
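As a rough illustration of what flagging could mean in practice, the sketch below checks a reply before it is spoken and substitutes a fallback line if anything looks wrong. The patterns, the length limit, and the fallback text are placeholders; a real deployment would more likely rely on a dedicated moderation model or service.

```python
import re

# Illustrative blocklist only, not a real safety policy.
BLOCKED_PATTERNS = [
    re.compile(r"\b(password|home address)\b", re.IGNORECASE),
]
FALLBACK_LINE = "Hmm, let's talk about something else."


def review_reply(reply: str, max_chars: int = 400) -> tuple[str, list[str]]:
    """Return a safe-to-speak line plus the reasons the reply was flagged."""
    flags = []
    if not reply.strip():
        flags.append("empty")
    if len(reply) > max_chars:
        flags.append("too_long")
    if any(pattern.search(reply) for pattern in BLOCKED_PATTERNS):
        flags.append("blocked_phrase")
    # Any flag replaces the reply; the flags can be logged for later review.
    return (FALLBACK_LINE if flags else reply), flags
```

Returning the flags alongside the text lets developers log what went wrong and tune prompts or filters over time.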

What’s powerful here isn’t just novelty—it’s that the experience feels unscripted. You ask something unexpected, and the AI improvises. You challenge it, and it adapts. That quality is hard to fake with traditional design approaches.

NPC-Playground is still a prototype, but it gives a clear look at what’s possible. In the near future, we’ll likely see memory persistence, meaning NPCs will recall past interactions across sessions. Emotional modeling could help shape responses not just by context, but by past tone and behavior. This would make characters feel more consistent over time.

Multiple NPCs could also talk to each other, creating dynamic scenes that evolve. Developers might script less and instead focus on tuning behavior and personality settings. Eventually, game engines could include native tools for LLM interaction, simplifying integration.
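One speculative way to picture NPC-to-NPC conversation is a round-robin loop in which each character sees the shared transcript and adds a line. In the sketch below, `run_scene` and `echo_reply` are hypothetical, and `echo_reply` is only a stand-in for a real model call.

```python
from typing import Callable

# A reply function takes (speaker_name, transcript) and returns one line.
ReplyFn = Callable[[str, list[str]], str]


def run_scene(npcs: list[str], opening: str, reply_fn: ReplyFn, turns: int = 4) -> list[str]:
    """Let NPCs take turns responding to a shared, growing transcript."""
    transcript = [opening]
    for turn in range(turns):
        speaker = npcs[turn % len(npcs)]  # simple round-robin order
        line = reply_fn(speaker, transcript)
        transcript.append(f"{speaker}: {line}")
    return transcript


def echo_reply(speaker: str, transcript: list[str]) -> str:
    """Stand-in for a model call so the loop can run without an LLM."""
    return f"I heard {len(transcript)} line(s) so far."


if __name__ == "__main__":
    scene = run_scene(["Vendor", "Historian"], "Narrator: The market opens.", echo_reply, turns=3)
    print("\n".join(scene))
```

Passing the reply function in as a parameter keeps the turn-taking logic separate from whichever model or API ends up generating the lines.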

The visual component adds weight to these conversations. Talking to a floating chatbot is one thing, but speaking to a digital person in a 3D space gives it a presence. Gestures, voice, and eye contact—even simulated—pull users in. It's easier to believe the interaction when you feel part of the environment.

That said, there’s still a long way to go. These models don’t have real memory, emotion, or self-awareness. They aren’t “thinking”—they’re reacting based on patterns. But the illusion is strong, and that’s often enough for learning, play, or engagement.

NPC-Playground isn't a full product. It's a demo that hints at a coming shift. LLM-powered NPCs are changing how we think about virtual conversation. Instead of fixed dialogue paths, we're entering spaces where characters talk like people, react in real time, and surprise us with their responses. This doesn't replace game writers or educators. It supports them, freeing them from rigid scripts and giving them tools to build deeper interactions. Whether in storytelling, training, or play, these AI characters are becoming part of how we interact in digital spaces, and NPC-Playground is an early, concrete example of that future.

Advertisement

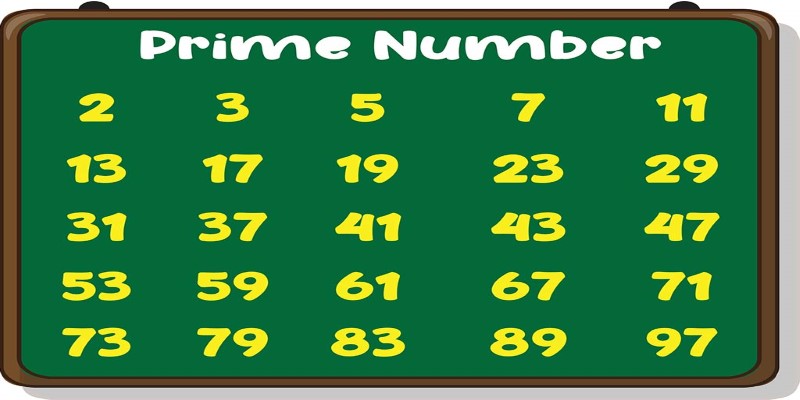

Learn how to write a prime number program in Python. This guide walks through basic checks, optimized logic, the Sieve of Eratosthenes, and practical code examples

Learn the top 7 impacts of the DOJ data rule on enterprises in 2025, including compliance, data privacy, and legal exposure.

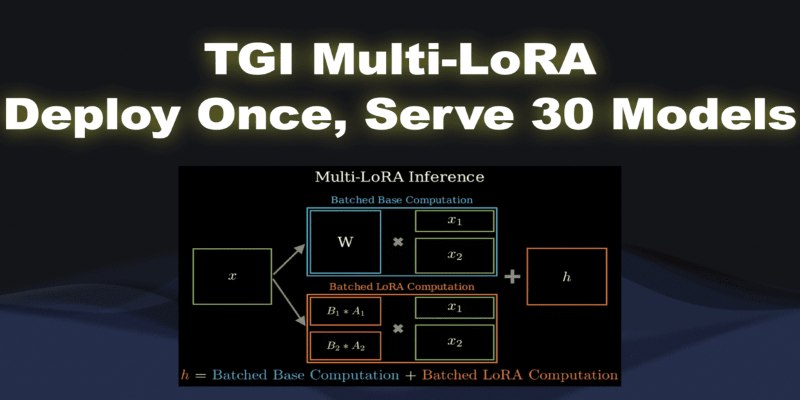

What if you could deploy dozens of LoRA models with just one endpoint? See how TGI Multi-LoRA lets you load up to 30 LoRA adapters with a single base model

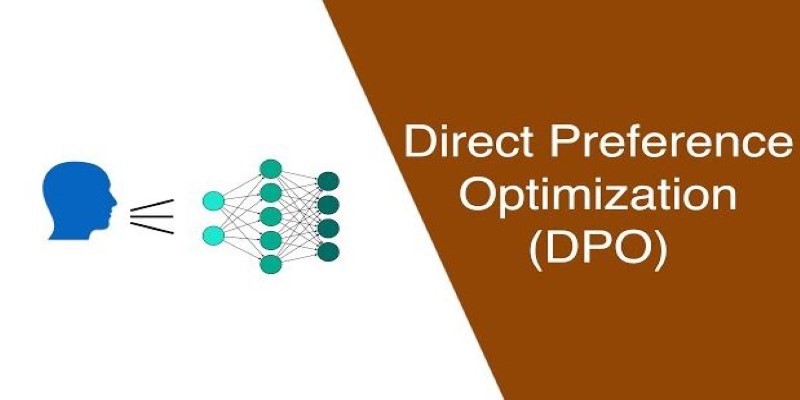

How Direct Preference Optimization improves AI training by using human feedback directly, removing the need for complex reward models and making machine learning more responsive to real-world preferences

Discover how clean data prevents AI failures and how Together AI's tools automate quality control processes.

AWS launches AI chatbot, custom chips, and Nvidia partnership to deliver cost-efficient, high-speed, generative AI services

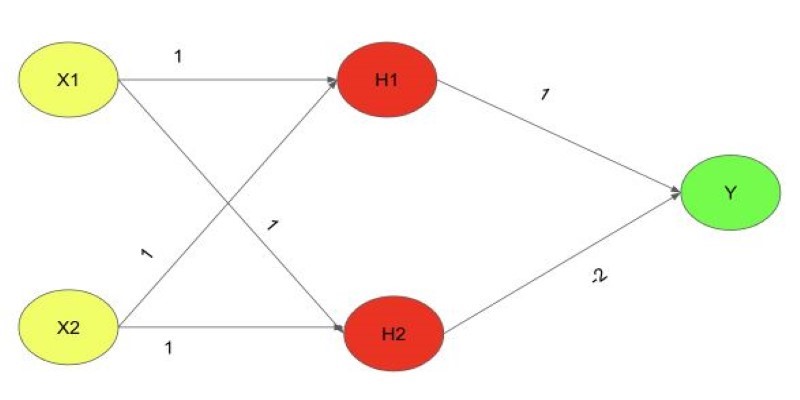

Explore the XOR Problem with Neural Networks in this clear beginner’s guide. Learn why simple models fail and how a multi-layer perceptron solves it effectively

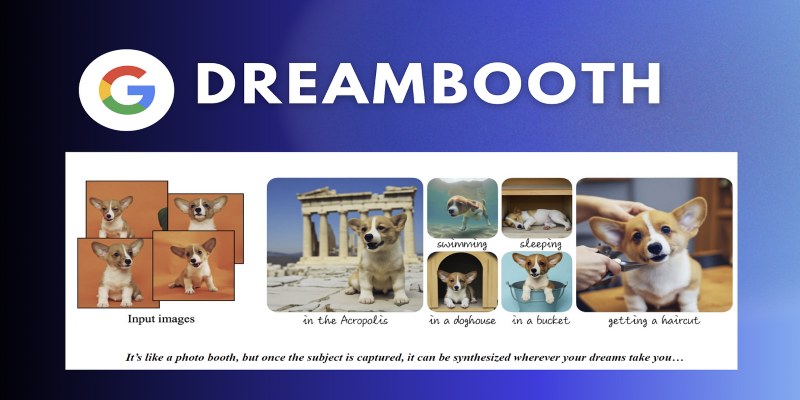

Learn how DreamBooth fine-tunes Stable Diffusion to create AI images featuring your own subjects—pets, people, or products. Step-by-step guide included

How Python’s classmethod() works, when to use it, and how it compares with instance and static methods. This guide covers practical examples, inheritance behavior, and real-world use cases to help you write cleaner, more flexible code

Reddit's new data pricing impacts AI training, developers, and moderators, raising concerns over access, trust, and openness

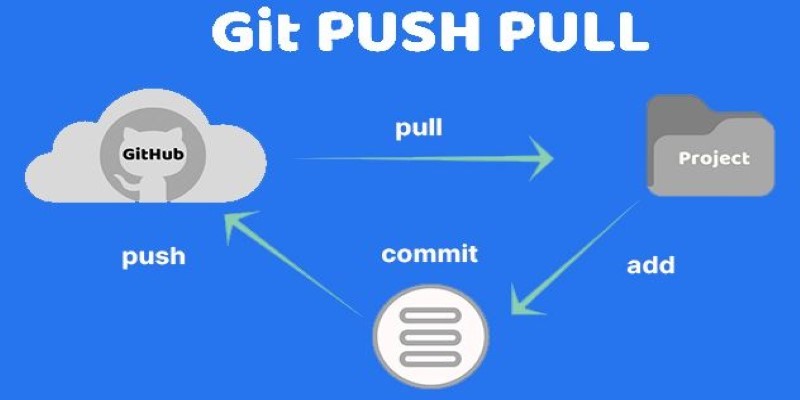

Still unsure about Git push and pull? Learn how these two commands help you sync code with others and avoid common mistakes in collaborative projects

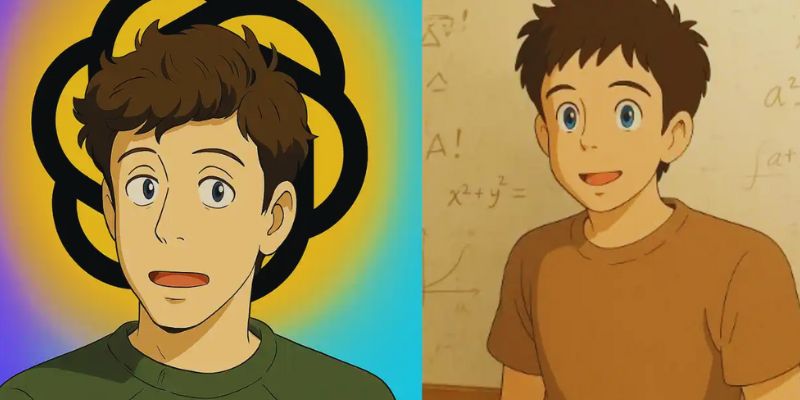

Discover how to generate enchanting Ghibli-style images using ChatGPT and AI tools, regardless of your artistic abilities