
Everywhere you turn, there's a new tool, a new startup, a new "revolutionary" product. But here’s the catch no one likes to talk about: most of these tools are only as good as the data they’re trained on.

You’ve probably already noticed this in little ways, like when a chatbot totally misunderstands your question or an image generator gives you something that looks like a weird mashup from a bad dream. That’s bad data at work.

Enter: Together AI. It’s not just another shiny name in the AI space—it’s a team tackling this whole “data quality” issue head-on. Because if we’re going to trust machines with anything remotely important (like summarizing your notes… or, you know, driving), we need to get serious about what we’re feeding them.

So… let’s dig into why data quality matters and how Together AI is actually doing something about it.

Here’s the thing about AI—it's not magic. It doesn't "understand" the world the way we do. What it does do is recognize patterns in massive piles of data… and repeat those patterns back to us when we ask for something.

But here’s the issue—what if that data is outdated, biased, low-quality, or just straight-up wrong? The model’s going to spit out something equally broken. And that’s not just an “oops” situation. That can lead to misinformation, offensive outputs, or systems that fail in real-world use.

Think about this for a second… what if a medical AI is trained on flawed research data? Or a hiring AI is trained on biased resumes? That’s not just inconvenient; it’s dangerous. And it happens a lot more than you’d think. Data quality in AI training is now about much more than keeping misinformation out.

Okay, let’s break it down a bit more:

This is what Together AI is trying to avoid. They’re building systems that care about what goes into the training pipeline. And that’s… refreshing.

One of the smartest things Together AI is doing? They're not rushing to build the biggest model or slap a trendy interface on top of an LLM. Nope. They’re starting from the ground up—fixing the data first.

They’re building what you’d call a data-centric AI stack. Meaning? They’re obsessed with cleaning, curating, filtering, and de-duping massive amounts of data before training even begins.
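To make "de-duping" a little more concrete, here's a minimal Python sketch of exact deduplication. It is not Together AI's actual pipeline; the `normalize` and `dedupe` helpers and the tiny sample corpus are made up for illustration. The idea is simply to hash a normalized copy of each document and keep only the first one seen.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash the same."""
    return re.sub(r"\s+", " ", text).strip().lower()


def dedupe(documents: list[str]) -> list[str]:
    """Keep the first occurrence of each document, comparing normalized hashes."""
    seen: set[str] = set()
    unique: list[str] = []
    for doc in documents:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique


# Hypothetical sample corpus, for illustration only.
corpus = [
    "Clean data matters.",
    "clean   data matters.",  # same text, different case and spacing
    "Noise wastes compute.",
]
deduped = dedupe(corpus)
print(deduped)
```

Real pipelines usually go further and catch near-duplicates too (pages that differ by a few words), but the keep-the-first-copy logic is the same basic shape.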

Their filtering isn’t just surface-level either. They’re developing tools that look at things like:

And they’re open-sourcing a ton of it too… which is honestly kind of a big deal.

Yup. That’s part of what makes Together AI stand out in a sea of AI companies that keep their tech behind closed doors. Their philosophy? We’re all better off if everyone builds on better data.

They’ve released open datasets (like RedPajama) and made their training pipelines public. That means researchers, developers, startups, and even hobbyists can build smarter models without starting from scratch—or worse—using junky data they pulled from random corners of the web.

It's like giving the whole internet community a better foundation to build on. We need more of that energy in tech.

Let’s clear something up real quick. It’s not about just having more data. More isn’t always better. In fact, bloated datasets with too much noise can slow down training, make models dumber (yep, it’s possible), and waste a ton of computing power.

Together AI is flipping that mindset. Instead of bragging about the size of their dataset, they’re focused on data quality per token. In plain speak, every word the AI sees during training should matter. No filler. No fluff. No garbage.
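Here's a rough Python sketch of what "quality over quantity" can look like in practice. Everything in it is an assumption for illustration: the `quality_score` heuristic, the 0.5 threshold, and the sample documents are invented, and a real filter would be far more sophisticated. It scores each document, drops the low scorers, and reports how many tokens survive.

```python
def quality_score(text: str) -> float:
    """Crude score in [0, 1]: share of word-like tokens times share of unique tokens."""
    tokens = text.split()
    if not tokens:
        return 0.0
    # A token counts as word-like if it is alphabetic once edge punctuation is stripped.
    wordlike = sum(1 for t in tokens if t.strip(".,!?;:'\"()").isalpha())
    unique = len({t.lower() for t in tokens})
    return (wordlike / len(tokens)) * (unique / len(tokens))


def filter_corpus(documents: list[str], threshold: float = 0.5):
    """Return the kept documents plus retained and total whitespace-token counts."""
    kept = [d for d in documents if quality_score(d) >= threshold]
    total = sum(len(d.split()) for d in documents)
    retained = sum(len(d.split()) for d in kept)
    return kept, retained, total


# Hypothetical sample documents, for illustration only.
docs = [
    "Every word the model sees should matter.",
    "buy buy buy buy buy buy",   # repetitive filler
    "@@@ ### 12345 $$$",         # symbol noise
]
kept, retained, total = filter_corpus(docs)
print(f"kept {len(kept)} of {len(docs)} documents, {retained} of {total} tokens")
```

The smaller corpus that comes out the other side is the point: fewer tokens, but each one is more likely to teach the model something.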

We love that. That’s what sustainable, smarter AI looks like.

Now you might be thinking: “Okay cool… but I’m not training AI models. Why should I care about Together AI’s approach?”

Fair question. Here’s why:

When companies care about the quality of data, it trickles down into the tools you use every day. Better search results, smarter suggestions, fewer weird answers. You feel it, even if you don’t always see it.

We’ve seen the headlines: chatbots giving offensive responses, image tools generating problematic visuals, or language models “hallucinating” facts out of thin air. Often, the fault lies less with the model itself than with its training data.

It didn’t learn the right thing… because it wasn’t taught the right thing.

This is why we keep coming back to data quality. It’s not just a technical thing. It’s a trust thing. If we’re going to use AI for more serious stuff—health, legal, finance, education—we need models that were raised on clean, balanced, factual, inclusive data.

That’s not a “nice to have.” It’s essential.

One of the coolest things about Together AI (and not enough people are talking about this) is their mission to decentralize AI. Meaning? They’re building infrastructure and tools that don’t require you to work at Google or OpenAI to participate.

They want everyone—academics, indie hackers, open-source devs—to be able to train and fine-tune models. Safely. Ethically. At scale.

It’s a big swing. But it could reshape the AI ecosystem for the better. More voices, more experimentation, more accountability. Less gatekeeping. We’re here for it.

Look, you don’t need to go out and build an AI model tonight (unless you want to). But understanding how and why AI works the way it does? That’s power. That’s the kind of digital literacy we need more of.

So next time you hear someone rave about how “smart” a new AI tool is… ask them what it was trained on. Ask who made it. Ask if it’s biased. These questions matter now more than ever.

And if you're choosing tools, look for ones built with care, transparency, and quality. It really does make a difference in the long run.

Advertisement

Need to convert a Python list to a NumPy array? This guide breaks down six reliable methods, including np.array(), np.fromiter(), and reshape for structured data

Meta's scalable infrastructure, custom AI chips, and global networks drive innovation in AI and immersive metaverse experiences

AWS launches AI chatbot, custom chips, and Nvidia partnership to deliver cost-efficient, high-speed, generative AI services

NPC-Playground is a 3D experience that lets you engage with LLM-powered NPCs in real-time conversations. See how interactive AI characters are changing virtual worlds

Gemma 3 mirrors DSLMs in offering higher value than LLMs by being faster, smaller, and more deployment-ready

Find how Flux Labs Virtual Try-On uses AI to change online shopping with realistic, personalized try-before-you-buy experiences

Can you really run a 7B parameter language model on your Mac? Learn how Apple made Mistral 7B work with Core ML, why it matters for privacy and performance, and how you can try it yourself in just a few steps

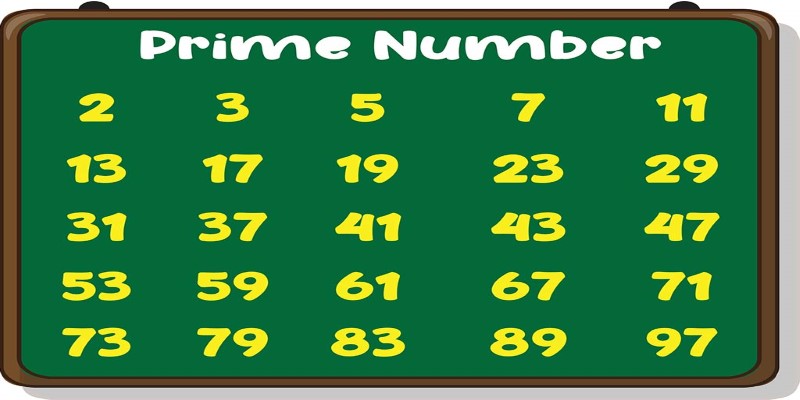

Learn how to write a prime number program in Python. This guide walks through basic checks, optimized logic, the Sieve of Eratosthenes, and practical code examples

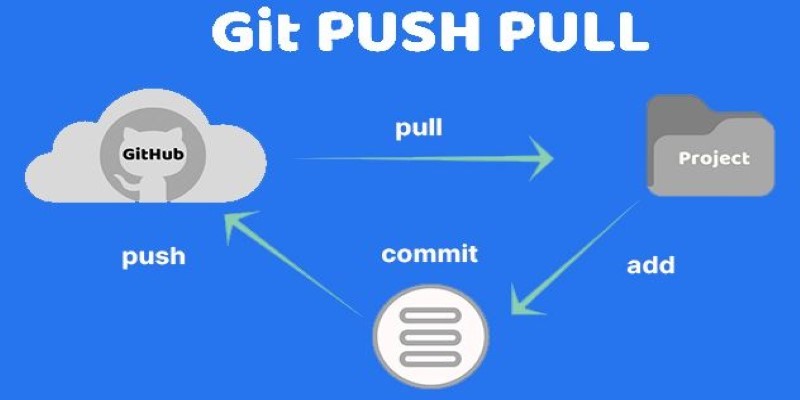

Still unsure about Git push and pull? Learn how these two commands help you sync code with others and avoid common mistakes in collaborative projects

Discover how clean data prevents AI failures and how Together AI's tools automate quality control processes.

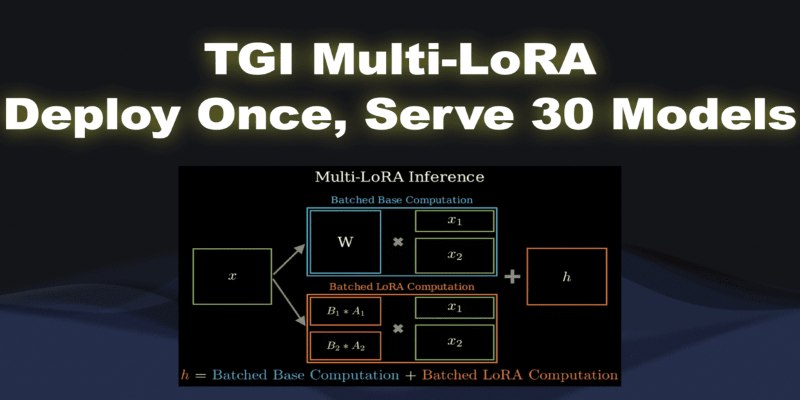

What if you could deploy dozens of LoRA models with just one endpoint? See how TGI Multi-LoRA lets you load up to 30 LoRA adapters with a single base model

Discover how OpenAI's Sora sets a new benchmark for AI video tools, redefining content creation and challenging top competitors